After a long hiatus, I have started writing again; however, I have moved the blog to GitHub. The new URL is:

Filed under strategies

With market making, we can try to stay neutral by skewing prices so as to maintain a flat position. To the extent that the market can become one-sided (during momentum) or that we may receive large requests (if offering at different sizes), the portfolio may require explicit hedging.
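A minimal sketch of inventory skewing, assuming a simple linear skew: both quotes are shifted against the current position by a hypothetical coefficient `skew_per_unit`, so a long book quotes lower (more likely to sell, less likely to buy). The function name and the linear form are illustrative assumptions, not a specific pricing model from this post.

```python
def skewed_quotes(mid: float, half_spread: float, inventory: float,
                  skew_per_unit: float) -> tuple[float, float]:
    """Return (bid, ask) centred on a mid shifted against current inventory.

    A positive (long) inventory lowers both quotes, making our offer more
    attractive and our bid less so, which nudges the position back to flat.
    The spread itself (2 * half_spread) is unchanged by the skew.
    """
    centre = mid - skew_per_unit * inventory
    return centre - half_spread, centre + half_spread


if __name__ == "__main__":
    # Long 2 units (e.g. 2M EUR) in EUR/USD: both quotes move down 0.2 pips
    print(skewed_quotes(1.1000, 0.00005, inventory=2.0, skew_per_unit=0.00001))
```

A linear skew is the simplest choice; a non-linear skew (steeper as inventory approaches a limit) is a common refinement.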

In a live market-making scenario we can decide how to hedge on a case-by-case basis, with a view on where it is cheapest to achieve the hedge. Within an FX portfolio there is the opportunity to hedge an excess position in one currency with a position in one or more other currency pairs, potentially taking on some basis risk.

An interesting inverse problem was posed by a colleague of mine. Suppose one knows the net positions of a portfolio at each historical time step and wants to back out the most conservative view on:

This problem cannot be solved definitively as any view on hedging or risk assumes a model of forward price dynamics. However, I thought a reasonable way to approach this would be to:

Mean Reversion Model

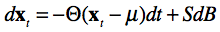

There are a variety of choices of mean-reversion model. Some of the simplest are:

Regardless of which formulation we start with, we can express hedges as a combination of scaled portfolios on subsets of the assets in x. That is, we can determine sparse weighting vectors β₀, β₁, …, βₙ such that each linear combination βᵢᵀx is mean-reverting and possibly cointegrating. Note that one can have multiple mean-reverting vectors that include the same asset, both because there are different periodicities in mean reversion and because of the cross-relationships between assets.

For example, in a portfolio containing EUR, JPY, and CHF, one (cointegrating) weight vector would be [1.0, 0.0, -1.0].
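One way to check whether a candidate weight vector such as [1.0, 0.0, -1.0] is mean-reverting is to fit an AR(1) (discrete Ornstein-Uhlenbeck) model to the spread βᵀx and convert the fitted persistence into a half-life. This is a minimal sketch on synthetic data; the function name and the AR(1) choice are assumptions for illustration, not the specific mean-reversion model referenced above.

```python
import numpy as np


def spread_half_life(prices: np.ndarray, beta: np.ndarray) -> float:
    """Mean-reversion half-life (in time steps) of the spread s = prices @ beta.

    Fits the discrete Ornstein-Uhlenbeck / AR(1) form
        s[t] - s[t-1] = a + b * s[t-1] + e[t]
    by least squares; b < 0 indicates mean reversion.
    """
    s = np.asarray(prices, dtype=float) @ np.asarray(beta, dtype=float)
    lagged, ds = s[:-1], np.diff(s)
    design = np.column_stack([np.ones_like(lagged), lagged])
    (_, b), *_ = np.linalg.lstsq(design, ds, rcond=None)
    phi = 1.0 + b                      # AR(1) persistence of the spread
    if phi >= 1.0:
        return float("inf")            # unit root or explosive: no reversion
    if phi <= 0.0:
        return 0.0                     # reverts (or overshoots) within a step
    return float(np.log(0.5) / np.log(phi))


if __name__ == "__main__":
    # Synthetic EUR, JPY, CHF price paths where CHF = EUR + AR(1) noise
    rng = np.random.default_rng(42)
    n = 2000
    eur = np.cumsum(rng.normal(size=n))
    jpy = np.cumsum(rng.normal(size=n))
    noise = np.zeros(n)
    for t in range(1, n):
        noise[t] = 0.9 * noise[t - 1] + rng.normal()
    X = np.column_stack([eur, jpy, eur + noise])
    print(spread_half_life(X, np.array([1.0, 0.0, -1.0])))  # short half-life
    print(spread_half_life(X, np.array([1.0, -1.0, 0.0])))  # long: random walk
```

With a true persistence of 0.9, the first estimate should land near ln 0.5 / ln 0.9, about 6.6 steps, while the EUR minus JPY spread (two independent random walks) shows a much longer or infinite half-life.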

Optimisation Problem

Assuming we have:

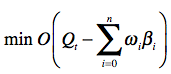

The goal is to find the smallest net portfolio after subtracting the scaled mean-reverting vectors ωᵢβᵢ, as measured by some objective function O(q):

The most appropriate objective function is one that expresses the residual risk of the portfolio. For simplicity, I define the risk objective as finding the residual portfolio whose covariance matrix has minimum volume (i.e. determinant). The intuition is that a covariance matrix with highly aligned (correlated) vectors will have smaller volume. Likewise, lower variance reduces the magnitude of the vectors (and therefore the volume).

Given a covariance matrix Σ_ΔT on unit positions, we can scale the covariance matrix by the residual position vector q as:

and hence determine the objective function O(q) to be the determinant of the residual covariance:

Putting it all together, we want to minimize:

Given a minimizing vector ω, we can determine the residual, unhedged position at time step t to be:

Solution

The optimization problem is similar to the packing (knapsack) problem: given K distinct item types (our beta vectors), we try to determine how many units of each item optimally fill a bag.

In our case, the items to be packed are the beta vectors and the bag is the position we are trying to reduce. We are trying to determine how many units of each beta vector to use in combination with other beta vectors to achieve the best reduction (packing).

To reduce the combinatorial possibilities to something finite, we can assume that only certain scalings of the beta vectors are used. For a FX portfolio, the market tends to trade in multiples of 100K on one of the currencies (usually the base currency). In equities, the convention would usually be units of 100.

We can then solve this with a combinatorial approach (exponential) or approximately with either a greedy algorithm (polynomial) or meta-heuristic optimization approach.
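A minimal sketch of the greedy variant, under stated assumptions: each βᵢ may only be applied in integer multiples of a lot size (100K, as for FX above), the residual is q = p − Σ ωᵢβᵢ, and the objective is passed in as a callable. For illustration the usage swaps in plain portfolio variance qᵀΣq as the risk score; the covariance-determinant objective described above could be supplied as `risk_of` instead. The function name and `max_steps` cap are hypothetical.

```python
import numpy as np


def greedy_hedge(p, betas, risk_of, lot=100_000.0, max_steps=1_000):
    """Greedily subtract +/- one lot of some beta vector while risk falls.

    p       : (n,) net position per asset
    betas   : (k, n) mean-reverting weight vectors, one per row
    risk_of : callable scoring a residual position vector (lower is better)
    Returns (omega, residual) with residual = p - omega @ betas.
    """
    residual = np.asarray(p, dtype=float).copy()
    betas = np.asarray(betas, dtype=float)
    omega = np.zeros(len(betas))
    risk = risk_of(residual)
    for _ in range(max_steps):
        best = None
        for i, beta in enumerate(betas):
            for sign in (1.0, -1.0):
                cand = residual - sign * lot * beta
                cand_risk = risk_of(cand)
                if cand_risk < risk and (best is None or cand_risk < best[0]):
                    best = (cand_risk, i, sign, cand)
        if best is None:
            break  # no single-lot move improves the objective
        risk, i, sign, residual = best
        omega[i] += sign * lot
    return omega, residual


if __name__ == "__main__":
    cov = np.diag([1e-4, 1e-4, 1e-4])          # hypothetical covariance
    p = np.array([300_000.0, 0.0, -300_000.0])  # long EUR, short CHF
    omega, q = greedy_hedge(p, [[1.0, 0.0, -1.0]], lambda r: r @ cov @ r)
    print(omega, q)
```

Greedy search is polynomial per step but can stop in a local minimum; the exhaustive or meta-heuristic approaches mentioned above trade run time for better solutions.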

Addendum

I should mention that there are many approaches that can be taken to evaluating portfolio risk. Gary Basin suggested looking at a PCA decomposition of the portfolio. PCA is definitely a useful way of determining the primary drivers of variance in the portfolio; I chose a method that allowed me to factor out mean-reverting sub-portfolios. There are so many approaches to this that I can’t really comment on which is best.
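As a sketch of the PCA view, assuming a covariance matrix Σ of unit-position returns is available (the function name is hypothetical): with Σ = V diag(λ) Vᵀ, the variance of a position p decomposes as pᵀΣp = Σₖ λₖ (vₖᵀp)², so each term is the variance carried by principal component k.

```python
import numpy as np


def pca_risk_contributions(position, cov):
    """Split the variance of a position across principal components of cov.

    Returns (eigenvalues, contributions), sorted by descending eigenvalue,
    where contributions[k] = lam_k * (v_k . p)^2 and they sum to p^T cov p.
    """
    lam, vecs = np.linalg.eigh(cov)            # eigh: ascending eigenvalues
    order = np.argsort(lam)[::-1]
    lam, vecs = lam[order], vecs[:, order]
    loadings = vecs.T @ np.asarray(position, dtype=float)
    return lam, lam * loadings ** 2
```

A position whose variance is concentrated in the first one or two components is a natural candidate for hedging along those component directions.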

Investors often look for uncorrelated returns so as to diversify better. Across world indices and equities there is much less diversity between assets than there was a decade ago; indeed, cross-market correlations are remarkably high.

On the other hand, from a trading perspective we generally want to be able to reduce risk by hedging or spreading against related assets. For example, in FX, when market making the G10 currencies, one typically offsets inventory risk with a position in another highly correlated currency or portfolio of currencies.

With that in mind let’s look at bitcoin (BTC) vs a variety of ETFs representing FX, IR, world indices, and commodities.

Below I have computed 1-day, 5-day, and 15-day return correlations across BTC and a variety of FX, IR, commodity, and world-index ETFs. To reduce the impact of outliers (return spikes), I made use of my Minimum Covariance Determinant covariance implementation.

IR & FX

Commodities

World Indices

All Together (1day, 5days, 15days)
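The author's Minimum Covariance Determinant implementation is not shown. As a stand-in, here is a simplified numpy-only sketch of the idea (FastMCD-style concentration steps from random starts, without the consistency or reweighting corrections of a full implementation such as scikit-learn's `MinCovDet`), together with non-overlapping k-day log returns. Function names and defaults are assumptions.

```python
import numpy as np


def horizon_log_returns(prices: np.ndarray, k: int) -> np.ndarray:
    """Non-overlapping k-step log returns for a (T, n) price array."""
    return np.diff(np.log(prices[::k]), axis=0)


def mcd_correlation(X, support_fraction=0.75, n_starts=20, n_csteps=50, seed=0):
    """Correlation matrix from a simplified Minimum Covariance Determinant fit.

    From random (p+1)-point starts, repeat "C-steps": fit mean/cov on the
    subset, keep the h points with the smallest Mahalanobis distance.  The
    h-subset with the smallest covariance determinant wins.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    h = int(np.ceil(support_fraction * n))
    rng = np.random.default_rng(seed)
    best_det, best_cov = np.inf, None
    for _ in range(n_starts):
        idx = rng.choice(n, size=p + 1, replace=False)
        for _ in range(n_csteps):
            mu = X[idx].mean(axis=0)
            prec = np.linalg.pinv(np.cov(X[idx], rowvar=False))
            diff = X - mu
            d2 = np.einsum("ij,jk,ik->i", diff, prec, diff)  # squared Mahalanobis
            new_idx = np.sort(np.argsort(d2)[:h])
            if np.array_equal(new_idx, idx):
                break                                      # subset converged
            idx = new_idx
        cov = np.cov(X[idx], rowvar=False)
        det = np.linalg.det(cov)
        if det < best_det:
            best_det, best_cov = det, cov
    sd = np.sqrt(np.diag(best_cov))
    return best_cov / np.outer(sd, sd)
```

Because correlation is scale-free, the missing consistency factor does not affect the result; a handful of return spikes that would flip an ordinary Pearson correlation are simply excluded from the winning subset.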

The VECM model can be used to express the co-movement of related assets across time, formulated as the change in asset prices as:

$$\Delta x_t = \delta_0 + \delta_1 t + \delta_2 t^2 + \dots + \Pi x_{t-1} + \Phi_1 \Delta x_{t-1} + \Phi_2 \Delta x_{t-2} + \dots + \varepsilon_t$$

or alternatively can be formulated as the VAR model:

$$x_t = \delta_0 + \delta_1 t + \delta_2 t^2 + \dots + \Gamma_1 x_{t-1} + \Gamma_2 x_{t-2} + \Gamma_3 x_{t-3} + \dots + \varepsilon_t$$
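The two forms are algebraically equivalent: for a VAR(p), Π = Γ₁ + … + Γₚ − I and Φᵢ = −(Γᵢ₊₁ + … + Γₚ), with identical deterministic terms. The rank of Π is the number of cointegrating relations, which is what the Johansen trace test estimates. A small sketch of the mapping (function name hypothetical), checked by comparing one-step predictions:

```python
import numpy as np


def var_to_vecm(gammas):
    """Map VAR(p) lag matrices [G1, ..., Gp] to VECM form (Pi, [Phi1, ..., Phi_{p-1}]).

    Pi    = G1 + ... + Gp - I
    Phi_i = -(G_{i+1} + ... + G_p)
    The deterministic terms (delta0, delta1 t, ...) are identical in both forms.
    """
    gammas = [np.asarray(g, dtype=float) for g in gammas]
    n = gammas[0].shape[0]
    pi = sum(gammas) - np.eye(n)
    phis = [-sum(gammas[i + 1:]) for i in range(len(gammas) - 1)]
    return pi, phis


if __name__ == "__main__":
    G1 = np.array([[0.5, 0.1], [0.0, 0.6]])
    G2 = np.array([[0.2, 0.0], [0.1, 0.1]])
    pi, phis = var_to_vecm([G1, G2])
    x_prev, x_prev2 = np.array([1.0, 2.0]), np.array([0.5, 1.5])
    var_next = G1 @ x_prev + G2 @ x_prev2
    vecm_next = x_prev + pi @ x_prev + phis[0] @ (x_prev - x_prev2)
    print(np.allclose(var_next, vecm_next))  # True
```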

The two variations of cointegration I will look at are:

Cointegrated assets that do not drift away from each other over time, even “deterministically”, are far preferable to those with a time-based drift. I was not able to find any trivially cointegrated assets in my sample set; however, a number of assets showed strong “type 2” cointegration. Here is one of several that were significant at 95% confidence:

```python
# Johansen test on the BTC and IEF price columns
BTCcoint1 = Johansen(Pbtc14[['BTC', 'IEF']], p=1, k=1)
BTCcoint1.critical_trace

BTCcoint1.eigenvectors
```

| | 0 | 1 |
|---|---|---|
| BTC | 0.037677 | -0.019919 |
| IEF | 0.999290 | 0.999802 |

The key linkages between Bitcoin and other assets will be driven by investor trading patterns rather than fundamentals at this point. Should Bitcoin become more of a transactional “currency”, we may start to see more fundamental linkages.

I am dusting off some code from a few years ago to create a rolling view on spread asset ratios. I need to rework it and apply it as a pairs / basket strategy, and will post some in-sample and out-of-sample results at a later point.

I have 4 Bitcoin L3 exchange feeds running smoothly out of a data center in California (which is slightly closer to the Asian exchanges and Coinbase than the east coast is). It took a bit of error handling and exponential back-off to cope with the unreliable connectivity of these exchanges, where connections can intermittently be overwhelmed (returning 502 / 503 errors, due to the poor choice of a REST-based API).
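A minimal sketch of retry with exponential back-off, assuming the HTTP client (not shown) raises a hypothetical `TransientHTTPError` on 502 / 503 responses. The delay uses "full jitter" (a random draw up to the exponential cap), one common way to keep many reconnecting clients from retrying in lockstep; `sleep` and `rng` are injectable so the schedule can be tested.

```python
import random
import time


class TransientHTTPError(Exception):
    """Hypothetical error raised by a request function for 502/503 responses."""

    def __init__(self, status: int):
        super().__init__(f"HTTP {status}")
        self.status = status


def with_backoff(request, max_retries=6, base_delay=0.5, max_delay=30.0,
                 sleep=time.sleep, rng=random.random):
    """Call request(); on TransientHTTPError, retry with exponential back-off.

    The delay before retry n (0-based) is drawn uniformly from
    [0, min(max_delay, base_delay * 2**n)].  After max_retries failed
    retries the last error is re-raised.
    """
    for attempt in range(max_retries + 1):
        try:
            return request()
        except TransientHTTPError:
            if attempt == max_retries:
                raise
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng() * cap)
```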

I am thinking of adding Bitstamp and Kraken to the mix, even though they are smaller. Bitstamp seems to have recovered somewhat since its security breach, and Kraken is unique due to its EUR-denominated trading.

HFT Opportunity

Bitcoin trading and order volume is quite far from that of the hyper-fast equities, FX, or treasuries markets. That said, the market has significant potential for market makers and short-term prop trading, given the greater transparency of its microstructure. The caveat is that transaction costs on many exchanges are enormous (10+bp or 20+bp round-trip), though trading on BTCChina is surprisingly free (fees are charged on withdrawal).

I am really at the beginning of collecting HFT-style data for the main exchanges, so while I have tested a couple of signals on very small samples, I want to collect a larger data set for backtesting and fine-tuning. The 2 signals I have tested are around short-term momentum detection, so they can be used either to follow momentum or for risk avoidance in a market-making engine. In the latter case, I would remove my quote from one side of the market on detecting a one-sided move, avoiding adverse selection.
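The two signals are not described here, so this sketch uses a hypothetical stand-in: the signed volume imbalance (buys minus sells, over total volume) of the last `window` trades. When buying dominates, the offer is pulled; when selling dominates, the bid is pulled. The class name, window, and threshold are all illustrative assumptions.

```python
from collections import deque


class OneSidedGuard:
    """Decide which sides to quote given recent aggressor-signed trade flow."""

    def __init__(self, window: int = 50, threshold: float = 0.6):
        self.trades = deque(maxlen=window)   # signed sizes: + buy, - sell
        self.threshold = threshold

    def on_trade(self, size: float, buyer_aggressed: bool) -> None:
        self.trades.append(size if buyer_aggressed else -size)

    def imbalance(self) -> float:
        """(buy volume - sell volume) / total volume, in [-1, 1]."""
        total = sum(abs(s) for s in self.trades)
        return sum(self.trades) / total if total else 0.0

    def sides_to_quote(self) -> tuple[bool, bool]:
        """Return (quote_bid, quote_ask); a strong one-way flow pulls a side."""
        imb = self.imbalance()
        return imb > -self.threshold, imb < self.threshold
```

Pulling only the side being run over keeps the engine providing liquidity on the other side, where fills are less likely to be adversely selected.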

Restrictions

Some marketplaces look to put in measures to keep their marketplace sane for lower-freq / non-HFT specialized traders. In looking at the Kraken API today, noticed the following:

We have safeguards in place to protect against abuse/DoS attacks as well as order book manipulation caused by the rapid placing and canceling of orders …

The user’s counter is reduced every couple of seconds, but if the counter exceeds 10 the user’s API access is suspended for 15 minutes. The rate at which a users counter is reduced depends on various factors, but the most strict limit reduces the count by 1 every 5 seconds.

So effectively Kraken will allow for a small burst of order adjustments or placements, but only allows an average of 1 adjustment / 5 sec. For a market maker 1 every 5 seconds (or 2 sides every 10) is too limiting. This can become a problem for both market makers and trend / momentum followers if the market is moving. Perhaps this limit should scale with respect to price movement or be combined with a higher limit + minimum TTL (time to live).
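A client-side guard can keep an engine within such a limit rather than risking a 15-minute suspension. This sketch models the rule quoted above under stated assumptions (not Kraken's published algorithm): each placement or cancel adds 1 to a counter, the counter decays continuously at the strictest rate of 1 per 5 seconds, and any action that would push it above 10 is refused locally. The class name and parameters are hypothetical.

```python
class CounterThrottle:
    """Refuse order actions that would push a decaying counter over a limit."""

    def __init__(self, limit=10.0, decay_per_sec=1 / 5, cost=1.0):
        self.limit, self.decay, self.cost = limit, decay_per_sec, cost
        self.counter, self.last_t = 0.0, 0.0

    def _decay_to(self, t: float) -> None:
        # Assumes non-decreasing timestamps (seconds)
        self.counter = max(0.0, self.counter - self.decay * (t - self.last_t))
        self.last_t = t

    def try_action(self, t: float) -> bool:
        """Record an action at time t if it keeps the counter within the limit."""
        self._decay_to(t)
        if self.counter + self.cost > self.limit:
            return False
        self.counter += self.cost
        return True
```

In steady state this admits one action every 5 seconds after an initial burst of 10, which is exactly the constraint that makes two-sided quote maintenance impractical.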

I am actually for certain restrictions in the market. The equities market, in particular, needs to be cleaned up, not with new taxes or ill-conceived regulation (reg-NMS for example), but with:

#2 and #3 would remove many of the games on the exchange that do not serve the market.

I’ve been a bit distracted recently brainstorming some blockchain-related ideas with colleagues, and working on a research & trading UI.

OxyPlot

First, I wanted to give a plug for OxyPlot. If you use F#/C# or the .NET ecosystem, OxyPlot is a well-designed interactive scientific plotting library. The library renders to iOS, Android, Mono.Mac, GTK#, Silverlight, and WPF. If one is dealing with a manageable amount of data, I highly recommend Bokeh and Python. However, if you need significant interactive functionality or need to interact with large data sets, OxyPlot is a great solution:

I wanted to build a UI that would let me observe and develop better intuitions about order book characteristics. OxyPlot did not have good financial charts, so I contributed high-performance interactive candlestick and volume charts that can handle millions of bars (points), along with some pane alignment controls, to the project. Here is an example of the sort of UI one can put together with OxyPlot:

Feed Status

I was awaiting a reply from BTCChina regarding their broken FIX implementation. As the API issue has not been resolved, I implemented the BTCChina REST API and deployed the feeds for the 4 exchanges of primary interest:

Though 2 of the exchanges require 4-sec sampling (due to the lack of streaming APIs), all intervening trades are captured between queries. For L2 sources, order book transactions are implied by looking at the minimum sequence of transactions that would produce the difference between 2 successive snapshots of the order book. All of the feeds yield a common transaction format:

Ok, what I am going to say here is probably Bitcoin heresy, in that I am going to advocate more centralized clearing and management of assets with respect to exchange trading.

I want to be able to scale trading in bitcoin and execute across multiple exchanges. However, I have the following problems:

Solution

I want to keep my “working” fiat & bitcoin assets for trading with a trusted agent (a “Prime Broker” in the traditional trading space) who will grant me credit across a fairly wide range of exchanges. To completely isolate both the trader and the prime broker from exchange risk:

Case in point: exchanges such as BTC-e trade BTC/USD at a 100-150bp discount to Bitfinex. Why? I think this is due to the perceived credit risk of depositing funds with BTC-e, and possibly to issues in moving assets between exchanges to net out positions.

Perhaps there is an even more interesting angle, PrimeBrokerage V2, using the bitcoin ledger, ascertaining trust, etc. But for the moment, taking exchange risk out of the equation would open up the market further.

Advantages

Above and beyond solving the trust issue, more centralization or a way to coordinate amongst exchanges could help with:

I have implemented 4 bitcoin exchange interfaces now that produce a live L3 stream of orderbook updates + trades of the form:

Given the above, I can reconstitute the orderbook as it moves through time, and the same stream can likewise be used to create BBO quotes and bars of different granularities. The status of the exchange implementations is:

I am looking to run this on a remote machine (preferably Linux) and write to an efficient hierarchical file-based tick DB format that I use for equities, FX, and other instruments. I have not yet decided on a hosting service (suggestions welcome).

I am happy to share the collected data, though if it becomes too burdensome I may need to find a way to host and serve it properly.

Once I get this running on a host and collecting data, I want to get back to the analysis of signals. I will revisit new exchange implementations later.
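Reconstituting the book from an L3 stream amounts to keeping a map of live orders keyed by order id. This sketch assumes a hypothetical event shape (action, order_id, side, price, size), since the exact stream format is not shown, and assumes trades are followed by explicit UPDATE / DEL events so they need not be applied to the book. The BBO is derived by aggregating live orders by price.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Level = Tuple[float, float]  # (price, aggregate size)


@dataclass
class Order:
    side: str    # "BID" or "ASK"
    price: float
    size: float


@dataclass
class L3Book:
    """Order-level book rebuilt from NEW / UPDATE / DEL events."""
    orders: Dict[str, Order] = field(default_factory=dict)

    def apply(self, action: str, order_id: str, side: str = "",
              price: float = 0.0, size: float = 0.0) -> None:
        if action == "NEW":
            self.orders[order_id] = Order(side, price, size)
        elif action == "UPDATE":
            self.orders[order_id].size = size   # unknown id -> KeyError (feed gap)
        elif action == "DEL":
            del self.orders[order_id]
        else:
            raise ValueError(f"unsupported action {action!r}")

    def bbo(self) -> Tuple[Optional[Level], Optional[Level]]:
        """Best (price, size) on each side; None when a side is empty.

        Aggregates by price on every call (O(#orders)); a production book
        would maintain sorted price levels incrementally instead.
        """
        levels: Dict[str, Dict[float, float]] = {"BID": {}, "ASK": {}}
        for o in self.orders.values():
            levels[o.side][o.price] = levels[o.side].get(o.price, 0.0) + o.size
        best_bid = max(levels["BID"].items(), default=None)
        best_ask = min(levels["ASK"].items(), default=None)
        return best_bid, best_ask
```

Sampling `bbo()` after each event yields a BBO quote stream; bars can be built the same way by bucketing trades by time.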

Notes

[1] Bitfinex does not yet have a streaming API, so I am polling the orderbook on a 4-sec sample and determining the net transactions between snapshots. Though the orderbook is sampled, no trades are missed, as trades are queried relative to the last trade seen. I expect we will see a new exchange implementation from AlphaPoint sometime later this year.
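Both halves of this polling scheme can be sketched briefly, under assumptions: snapshots are dicts of price to aggregate size per side, and `fetch_since` is a hypothetical stand-in for an exchange REST call that returns up to `page_size` trades at or after a given trade id. The snapshot diff emits the fewest per-level NEW / UPDATE / DEL events; the trade poller pages forward from the last id seen, so bursts between polls are not truncated.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Levels = Dict[float, float]            # price -> aggregate size
Event = Tuple[str, str, float, float]  # (action, side, price, new_size)
Trade = Tuple[int, float, float]       # (trade_id, price, size)


def diff_levels(side: str, old: Levels, new: Levels) -> List[Event]:
    """Fewest per-level NEW/UPDATE/DEL events that turn `old` into `new`."""
    events: List[Event] = []
    for price in sorted(old.keys() | new.keys()):
        before, after = old.get(price, 0.0), new.get(price, 0.0)
        if before == after:
            continue
        if before == 0.0:
            events.append(("NEW", side, price, after))
        elif after == 0.0:
            events.append(("DEL", side, price, 0.0))
        else:
            events.append(("UPDATE", side, price, after))
    return events


def diff_snapshots(old_bids: Levels, old_asks: Levels,
                   new_bids: Levels, new_asks: Levels) -> List[Event]:
    return diff_levels("BID", old_bids, new_bids) + diff_levels("ASK", old_asks, new_asks)


def poll_new_trades(fetch_since: Callable[[int], Iterable[Trade]],
                    last_seen_id: int, page_size: int = 1000) -> Tuple[List[Trade], int]:
    """Collect every trade newer than last_seen_id; return (trades, new cursor)."""
    collected: List[Trade] = []
    cursor = last_seen_id
    while True:
        raw = list(fetch_since(cursor))
        # Drop anything already seen (endpoint bound may be inclusive)
        page = sorted((t for t in raw if t[0] > cursor), key=lambda t: t[0])
        collected.extend(page)
        if page:
            cursor = page[-1][0]
        if len(raw) < page_size or not page:
            return collected, cursor
```

Note that a per-level diff cannot tell a cancel from a fill; the trades returned by the poller are what let one attribute size reductions to executions.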

[2] BTCChina is implemented, however their FIX responses are not providing the documented data, so awaiting a solution from them.

In the previous post I outlined my intention to put together high-quality L2/L3 feeds for the top 4-5 bitcoin exchanges, collect L3 data, and provide a consolidated live orderbook for trading. So far I have implemented OKCoin and have been experimenting with the others to determine their API capabilities.

With the exception of OKCoin, what I’ve found so far is not good. Here is a summary of the top-4 exchanges w/ respect to market data APIs (I also included Coinbase with the notion will become a top player):

BTCChina is, by far, the largest exchange; however, it appears to have shoddy technology (at least on the market data side). They implemented FIX 4.4 last Nov; however, it is broken in that requests for the full OB fail to work as documented (if anyone has had any success with this, please let me know). BTCChina has an alternative public WebSocket/JSON API which provides just 5 orderbook levels. However, there appears to be a “secret” API which provides full depth-of-book, as real-time UIs (such as bitcoinwisdom) show activity beyond 5 levels (if anyone knows how this works, please let me know). Addendum: I have reached out to BTCChina; hopefully they will address the FIX depth-of-book subscription issue and not treat it as a feature.

OKCoin is the 2nd largest exchange by volume and appears to have the most active orderbook of the lot. The good news is that its FIX API works as documented. Activity-wise, I have been receiving 20+ transactions every 100-300ms, i.e. on the order of 15ms or less between transactions on average. This degree of activity rivals more traditional markets. That said, I suspect that the exchange or a market-making partner (with 0 transaction cost) is constantly pumping both sides of the market in the 1st 3-5 levels of the orderbook. The pattern I saw was very shallow orders (1/10th BTC) on the 1st 3-5 levels of both sides of the market getting swept on either side alternately.

Bitfinex seems to be the most popular BTC/USD exchange, and hence cannot be ignored, even though it has an ill-advised market data API. They are beta-testing AlphaPoint’s “modern” exchange implementation; once that hits production, we should expect both FIX and binary streaming feeds. I don’t know whether these will provide an L2 (depth of book) or L3 (order transactions) view of the exchange. Either would be very welcome.

BitStamp has fallen dramatically in terms of its share of BTC/USD volume. Given its troubles, I wonder whether it will survive. Though it has a “secret” API providing L3 data, the technology backing the exchange is suspect. In particular, its matching engine has both improper matching semantics (documented on reddit) and is very slow, where sweeping a few levels can take seconds to execute. Short of Bitstamp reinventing itself both in terms of technology and trust, the question is then, who will replace them as the #2 presence in the BTC/USD market?

Coinbase, or the yet-to-be-launched Gemini, may be the successor to Bitstamp in terms of market position (or perhaps even take on Bitfinex). With respect to market data, Coinbase provides a complete list of orders in the orderbook; however, this must be queried by polling their REST/JSON API. As you can imagine, this is not a scalable approach: the # of orders will grow over time to the point where the message size will be overwhelming on a periodic frequency. The right solution is a streaming API with order transaction “deltas” {new order, del order, update order, etc}. The most basic design, scale, and accessibility mistakes have been repeated, and on a high-profile exchange launch (regulated, NYSE-backed, etc). Update: Coinbase does have a streaming API with level 3 data; my mistake.

Chinese Exchanges

It is hard to know what is real with respect to volume & dealability on the chinese exchanges. I do not have any trading experience with either OKCoin or BTCChina, however, the following has been noted from multiple sources (for example):

At least as a data source, I think these exchanges provide value. More ideal would be to find a way to use them for trading while avoiding situations where we are unlikely to be able to execute. With respect to unresponsive crosses in larger price movements, the question is whether this is due to poor matching engine technology (where the OB may be overwhelmed)[1] or whether it is being done intentionally “for the house”[2]. It seems very likely that the exchanges are trading on their own behalf in addition to providing exchange services.

Today there was also bad news, where a HK based exchange (MaiCoin) disappeared with $3B HKD worth of deposits from customers. I think there is enough fraud and poor market practices in the bitcoin space, that a certain level of regulation and regulated exchanges will be welcomed.

Conclusions (technology)

My overall impression with (most of) the exchange Bitcoin technology is that it has been designed by the typical full-stack web app developer, taking few learnings from traditional markets or applying common sense with respect to scale. Polling with REST/JSON is one of the dumbest ideas that is pervasive in Bitcoin exchanges (though for some uses is fine). With this sort of exterior interface / design decision, one has to wonder what other marvels are present behind the scenes.

I am sure that these will mature and, via competition, converge towards more sophistication and sensible design. I would be happy to consult with these exchanges to move their APIs and implementations towards the state of the art, if for no other reason than to make these better venues for trading.

Notes

[1] Given the uninformed / ill-considered implementations I have seen in the BTC space, it would not be a surprise if most exchanges have deficient matching engines with respect to order volume scaling. Certainly Bitstamp does.

[2] The “for the house” scam would be to intentionally delay and front-run: in a market upswing, delay buying flow, buy in front of the buyer flow and sell to the latent buyers at a higher price.

It seems like every other month there is a new bitcoin exchange. For the purposes of trading research & backtesting it is important to have historical data across the most liquid exchanges. My minimal list is:

(percentage volume sourced from http://bitcoincharts.com/charts/volumepie/). Each of these exchanges not only has a unique protocol but also unique semantics that need to be normalized.

Bitstamp, for example, produces an unusual sequence of transactions for a partial sweep of the orderbook. Consider a partial sweep where a BUY 14 @ 250.20 was placed, crossing 4 orders on the sell side of the book:

In Bitstamp, one would see the following transactions:

The oddity here is that many market data streams and orderbook implementations will just transact the crossing in one go, so one will usually only see: DEL, TRADE, DEL, TRADE, DEL, TRADE (and deletes may not be sequenced between the trades either). Where it gets odd is in replaying this data: a typical OB implementation will sweep the book on seeing the order right away, without intermediate UPDATE states. In such an implementation, seeing an UPDATE to non-0 size after crossing and deleting the order completely might be seen as an error or a missed NEW, since the order is no longer on record in the OB.

Another note is that Bitstamp does not indicate the side of the trade (i.e. which side aggressed). Although aggressor side is uncommonly reported in markets such as equities or FX, Bitcoin exchanges generally do provide it. Fortunately, because the initial crossing order is provided, one can use a bipartite graph (in the presence of multiple crossing orders) to determine the most likely aggressing order and therefore the trade sign.
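As a simpler stand-in for the bipartite-graph approach (this is a greedy assignment, not a maximum matching), each trade can be attributed to the earliest-arriving crossing order whose limit could have produced that trade price and which still has unfilled size. The `CrossingOrder` type and `sign_trades` function are hypothetical; a true bipartite matching would resolve cases where the greedy choice blocks a better overall assignment.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CrossingOrder:
    order_id: str
    side: str         # "BUY" or "SELL"
    limit: float
    remaining: float  # unfilled size; decremented as trades are attributed


def sign_trades(trades: List[Tuple[float, float]],
                crossing: List[CrossingOrder]) -> List[int]:
    """Sign each (price, size) trade: +1 buyer aggressed, -1 seller, 0 unknown.

    A crossing BUY at limit L can only have produced trades at price <= L,
    and a crossing SELL only trades at price >= L.
    """
    signs: List[int] = []
    for price, size in trades:
        for order in crossing:
            compatible = (price <= order.limit if order.side == "BUY"
                          else price >= order.limit)
            if compatible and order.remaining >= size:
                order.remaining -= size
                signs.append(1 if order.side == "BUY" else -1)
                break
        else:
            signs.append(0)   # no crossing order explains this trade
    return signs
```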

Clearing House for Data

I would like to build and/or participate in the following:

It takes some amount of time to develop and a fairly small amount of money to run in terms of hosting. Assuming there are no EULA issues in doing so, I could perhaps provide the data under a non-profit sort of arrangement. I am not looking to build a for-profit company around this, but rather a collective where we can give something back to the community and perhaps make use of donated resources and/or data.

I guess I am old enough to have seen the wheel reinvented a number of times in both software infrastructure and financial technology spaces. Unfortunately the next generation’s version of the wheel is not always better, and with youthful exuberance often ignores the lessons of prior “wheels”. That said, we all know that sometimes innovation is a process of 3 steps forward and 2 steps backward.

Two examples that have impacted me recently.

HDFS

An example of a poorly reinvented wheel in the technology space is Hadoop / HDFS, where the lesson of Amdahl’s law has been ignored completely (i.e. keep the data near the computation, or communication overhead erodes the parallel speedup). When one writes to HDFS, HDFS distributes blocks of data across nodes, but with no explicit way to control data / node affinity. HDFS is only workable in scenarios where the data to be applied in a computation is <= the block size and packaged as a unit.

“Everybody and his brother” are touting big data on HDFS, S3, or something equivalent as the data solution for distributed computation, in many cases without thinking deeply about it. I was recently involved with a company dealing with a big data problem in the Ad space. I needed to track & model 400M+ users’ browsing behavior (URL visits) based on ~4B Ad auctions daily, relate each URL to content categories (via classification & a taxonomy of concepts), and then determine a feature vector for each user.

I argued that using HDFS for this data would be a failure with respect to scaling. The problem was that the auction data, 4B records of <timestamp,user,url, …>, was distributed uniformly across N nodes, rather than partitioned on userid MOD N. HDFS does not allow one to control data / node affinity. My computation was some function over all events seen in a historical period for each user. This function:

needed to be evaluated for each user, across all events for that user, and could not reasonably be decomposed into sub-functions on individual events. The technology I had to use was Spark over HDFS-distributed timeseries. I argued that to evaluate this function for each user, on average (N-1)/N of the requested data would need to be transported from other nodes (as only 1/N of the data was likely to be local in a uniformly distributed data-block scheme). The (lack of) performance did not “disappoint”: instead of a linear speedup (N x faster), it produced a linear slowdown (approaching N x slower due to the dominance of communication).
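The (N-1)/N figure can be checked with a small simulation. This is a simplified model, not Spark or HDFS placement logic: each user's function runs on node `user % N`, and each event is stored either on that same node (key-affine partitioning) or on a uniformly random node (placement that ignores the user key). The function name is hypothetical.

```python
import random


def remote_fraction(n_events: int, n_users: int, n_nodes: int,
                    by_user: bool, seed: int = 0) -> float:
    """Fraction of events that must be shipped to the node computing their user."""
    rng = random.Random(seed)
    remote = 0
    for _ in range(n_events):
        user = rng.randrange(n_users)
        home = user % n_nodes                      # node evaluating this user
        stored_on = home if by_user else rng.randrange(n_nodes)
        remote += stored_on != home
    return remote / n_events


if __name__ == "__main__":
    print(remote_fraction(100_000, 1_000, 10, by_user=True))   # 0.0
    print(remote_fraction(100_000, 1_000, 10, by_user=False))  # close to 0.9
```

With 10 nodes, uniform placement forces roughly 90% of events across the network, while partitioning on the user key keeps every read local.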

Bitcoin Exchanges

Bitcoin popularized a number of excellent ideas around decentralized clearing, accounting, and trust via the blockchain ledger. The core technology behind bitcoin is very innovative and is being closely watched by traditional financial institutions. The exchanges, on the other hand (with some exceptions), seem to have been built with little clue as to what preceded them in the financial space.
